Intro to Transformer Models

Literature

Notebooks - Basics

Notebooks - Applications

- TM Applications - SBERT

- TM Applications - HF

- Simple transformer LM

- SBERT for Patent Search using PatentSBERTa in PyTorch

Notebooks - FineTuning

- TM FineTuning - SimpleTransformers

- TM FineTuning - SBERT

- TM FineTuning - HF

- SetFit Hatespeech vs BERT and DistilRoBERTa

- Seq2Seq - Neural Machine Translation

Slides - Attention Mechanism

Slides - SBERT

Classification with various vectorization approaches

- TF-IDF and W2V Multi-Class Text Classification

- BERT Multi-Class Text Classification

- Implementing Multi-Class Text Classification LSTMs using PyTorch

Resources

- Original SBERT paper: Reimers, N., & Gurevych, I. (2019). Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. arXiv preprint arXiv:1908.10084.

- SBERT documentation

- NLP with SBERT - an ebook/course on using dense vectors (with SBERT) for business applications

- SBERT-Training Tutorial

- BERTopic - a framework for topic modelling with SBERT embeddings

- Milvus - Vector database

| Technique | Inventors & First Introduction (Year) | Key / Best-Known Paper (Year) | Notes / Additional Information |

|---|---|---|---|

| Single Neuron / Perceptron | - Frank Rosenblatt: “The Perceptron: A Probabilistic Model…” (1958) | - Minsky & Papert: Perceptrons (1969) | - Rosenblatt’s perceptron was one of the earliest neural network models. - Minsky & Papert (1969) highlighted its limitations, driving interest in multi-layer networks. - Laid the foundation for feedforward neural networks. |

| Multilayer Perceptron (MLP) | - Paul Werbos: (PhD Thesis, 1974) introduces backpropagation in theory | - Rumelhart, Hinton, Williams: “Learning Representations by Back-Propagating Errors” (1986) | - Werbos’s thesis was not well-known initially. - Rumelhart et al. (1986) popularized backpropagation, spurring the first wave of deep learning in the late 1980s–early 1990s. - MLPs are fully connected feedforward networks used in classification, regression, etc. |

| Recurrent Neural Network (RNN) | - John Hopfield: Hopfield Network (1982) introduced a form of recurrent computation | - Jeffrey Elman: “Finding Structure in Time” (1990) | - Hopfield networks (1982) are energy-based recurrent models. - Elman networks showed how to capture temporal or sequential patterns (language, time-series). - Other early RNN variants: Jordan networks (1986). |

| LSTM (Long Short-Term Memory) | - Sepp Hochreiter & Jürgen Schmidhuber: “Long Short-Term Memory” (1997) | - Gers, Schmidhuber, Cummins: “Learning to Forget…” (1999) - Greff et al.: “LSTM: A Search Space Odyssey” (2015) | - LSTMs introduced gating mechanisms (input, output, forget gates) to tackle vanishing/exploding gradients. - Subsequent refinements (peephole connections, GRUs, etc.) improved sequence modeling. - Achieved state-of-the-art performance on speech recognition, language modeling, and more. |

| CNN (Convolutional Neural Network) | - Kunihiko Fukushima: Neocognitron (1980) | - Krizhevsky, Sutskever, Hinton: “ImageNet Classification with Deep Convolutional Neural Networks” (2012, a.k.a. AlexNet) | - Neocognitron was a precursor to modern CNNs. - Yann LeCun refined CNNs in the late 80s–90s (e.g., LeNet for digit recognition). - AlexNet’s success in 2012 sparked the modern deep learning revolution. - CNNs became fundamental for image classification, detection, segmentation, etc. |

| Deep Belief Networks (DBN) | - Geoffrey Hinton, Simon Osindero, Yee-Whye Teh: “A Fast Learning Algorithm for Deep Belief Nets” (2006) | - Same as first introduction paper; extended in subsequent works by Hinton et al. | - DBNs stack Restricted Boltzmann Machines (RBMs) to learn hierarchical representations. - One of the earliest successful “deep” models, trained greedily layer-by-layer. - Revitalized interest in deep learning prior to the CNN breakthrough. |

| Autoencoders | - Concept by Rumelhart, Hinton, Williams in the 1980s (parallel to MLP research), though not labeled “autoencoder” initially | - Vincent et al.: “Stacked Denoising Autoencoders” (2010) | - Autoencoders learn latent representations via reconstructing their inputs. - Variants: denoising autoencoders, sparse autoencoders, contractive autoencoders. - Used for unsupervised/self-supervised pretraining, dimensionality reduction, feature learning, etc. |

| GNN (Graph Neural Network) | - Marco Gori, Gabriele Monfardini, Franco Scarselli: “A new model for learning in graph domains” (2005) | - Kipf & Welling: “Semi-Supervised Classification with Graph Convolutional Networks” (2016) | - Scarselli et al. (2009) formalized the GNN framework. - Kipf & Welling’s GCN (2016) popularized graph convolution, spurring a wave of GNN research (GraphSAGE, GAT, etc.). - Used for social networks, molecule property prediction, recommendation systems, and more. |

| Variational Autoencoder (VAE) | - Diederik P. Kingma & Max Welling: “Auto-Encoding Variational Bayes” (2013/2014) | - Rezende, Mohamed, Wierstra: “Stochastic Backpropagation…” (2014) | - VAEs introduce a latent-variable generative framework built around autoencoders. - Widely used for image generation, anomaly detection, representation learning. - Probabilistic approach to learning continuous latent spaces. |

| Generative Adversarial Network (GAN) | - Ian Goodfellow et al.: “Generative Adversarial Nets” (2014) | - Radford, Metz, Chintala: “Unsupervised Representation Learning with Deep Convolutional GANs” (DCGAN, 2016) | - GANs involve a generator and discriminator in a minimax game. - DCGAN popularized stable training and improved image generation quality. - Countless variants (WGAN, StyleGAN, CycleGAN) used for high-fidelity image synthesis, domain translation, etc. |

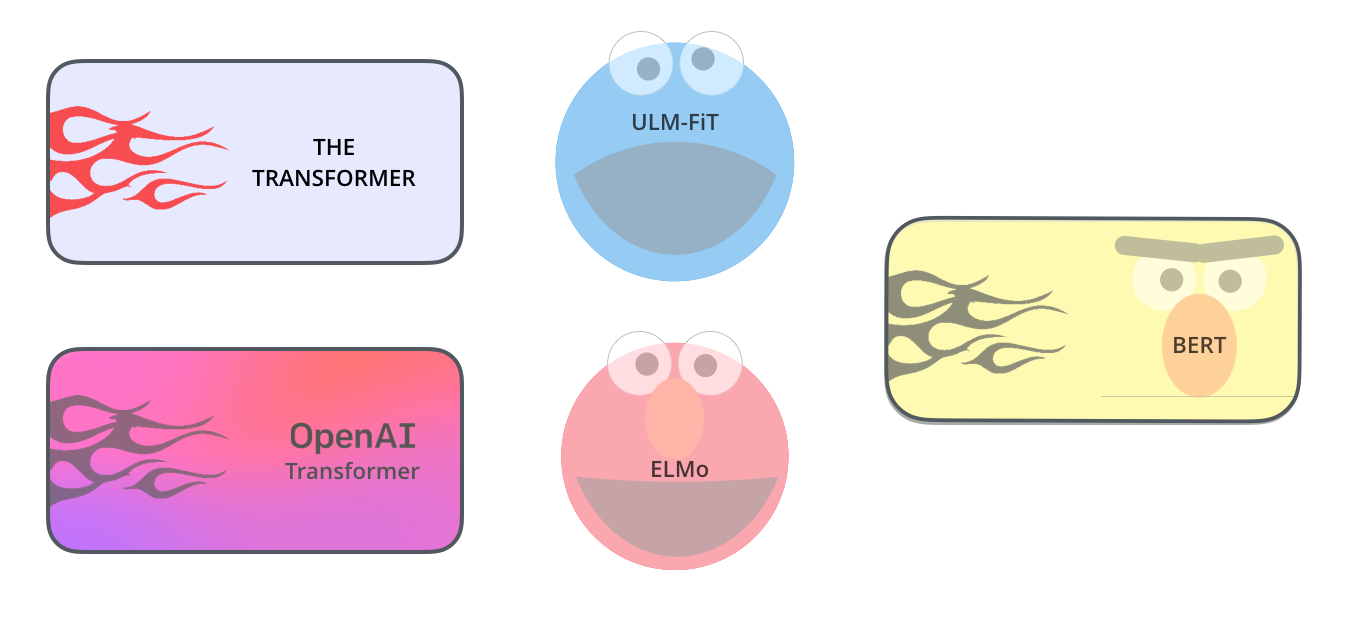

| Transformer | - Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser, Polosukhin: “Attention Is All You Need” (2017) | - Devlin et al.: “BERT: Pre-training of Deep Bidirectional Transformers…” (2018) - Brown et al.: “Language Models are Few-Shot Learners” (GPT-3) (2020) | - The Transformer removed recurrence and convolutions, relying on self-attention for sequence processing. - BERT, GPT, T5, and other large Transformer models achieve state-of-the-art in NLP and beyond. - Adaptations exist for vision (ViT), audio, multimodal tasks, etc. |
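
The Transformer row says the model relies on self-attention for sequence processing. As a rough illustration, here is a minimal sketch of single-head scaled dot-product attention in plain Python. It is a simplification: the function names and the toy `tokens` input are made up for this example, and the learned query/key/value projections, multiple heads, and masking of a real Transformer are left out.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention without masking.

    queries and keys are lists of d_k-dimensional vectors; values holds one
    vector per key. Returns (outputs, weights).
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Each output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy sequence of three 2-d "token" vectors (hypothetical data).
# In self-attention, queries, keys, and values all come from the same sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and shows how strongly one token attends to every token in the sequence, including itself. Libraries such as PyTorch compute the same thing with batched matrix operations.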